How do you manage your applications & IT infrastructure?

How do you provision, configure, manage, and scale up various elements on-demand for a large-scale IT infrastructure made up of networks, databases, servers, storage, operating systems, and other elements?

Traditionally, a dedicated team of system administrators and specialists performed these tasks manually as the need arose. Resource provisioning is a complex task, and agility, flexibility, and cost-effectiveness each came at the expense of the others.

Infrastructure as Code (IaC) addresses these challenges. IaC enables enterprises to automate infrastructure provisioning and scaling, which speeds up the development, deployment, and scaling of cloud applications and reduces their cost.

What is Infrastructure-as-Code (IaC) and How Does it Work?

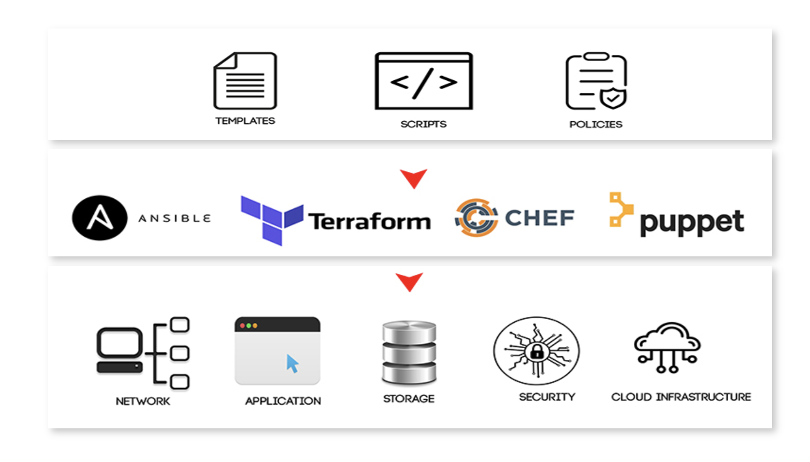

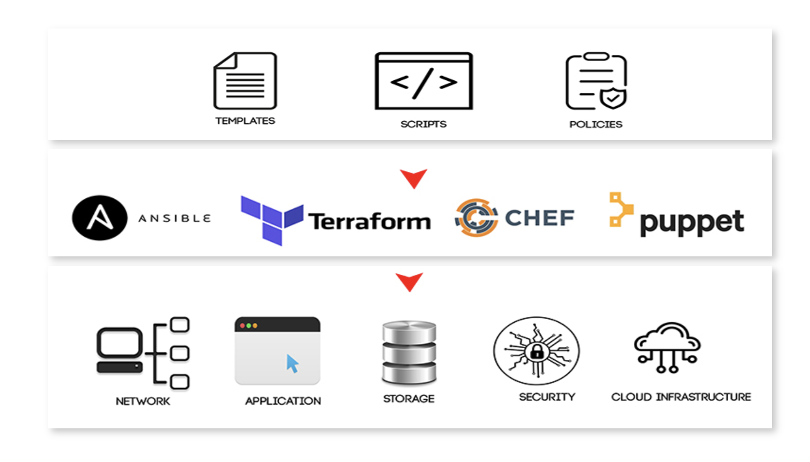

Infrastructure as Code uses machine-readable definition files (also called templates), written in a high-level descriptive language, to automate IT infrastructure provisioning. Human intervention is minimized, and developers can focus on application development and deployment rather than on resource needs.

IaC borrows software development lifecycle practices to automate resource provisioning. When there are changes in resource allocation and provisioning strategies, the changes are made to the definition files and rolled out to systems through unattended processes, after thorough validation.

So, humans do not manually provision or configure the resources. They do not set up new hardware or software systems to support their applications. Everything happens at the code level.
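To make the idea of a definition file concrete, here is a minimal sketch in Python. It is not the API of any particular IaC tool: the resource names, the `DESIRED` and `ACTUAL` dictionaries, and the `plan` function are all hypothetical. It only illustrates how a tool might compare the declared state with what currently exists and work out the actions to take.

```python
# Hypothetical desired state, as it might be declared in a definition file.
DESIRED = {
    "web-1": {"type": "server", "cpu": 2, "memory_gb": 4},
    "web-2": {"type": "server", "cpu": 2, "memory_gb": 4},
    "db-1": {"type": "database", "storage_gb": 100},
}

# Hypothetical snapshot of what is currently running.
ACTUAL = {
    "web-1": {"type": "server", "cpu": 2, "memory_gb": 4},
    "db-1": {"type": "database", "storage_gb": 50},
    "old-cache": {"type": "server", "cpu": 1, "memory_gb": 1},
}


def plan(desired, actual):
    """Return the (action, name) steps that turn `actual` into `desired`."""
    actions = []
    for name, spec in sorted(desired.items()):
        if name not in actual:
            actions.append(("create", name))
        elif actual[name] != spec:
            actions.append(("update", name))
    # Anything running that is no longer declared gets removed.
    for name in sorted(actual):
        if name not in desired:
            actions.append(("delete", name))
    return actions


print(plan(DESIRED, ACTUAL))
# [('update', 'db-1'), ('create', 'web-2'), ('delete', 'old-cache')]
```

Changing the infrastructure then means editing `DESIRED` and re-running the plan, rather than touching any system by hand.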

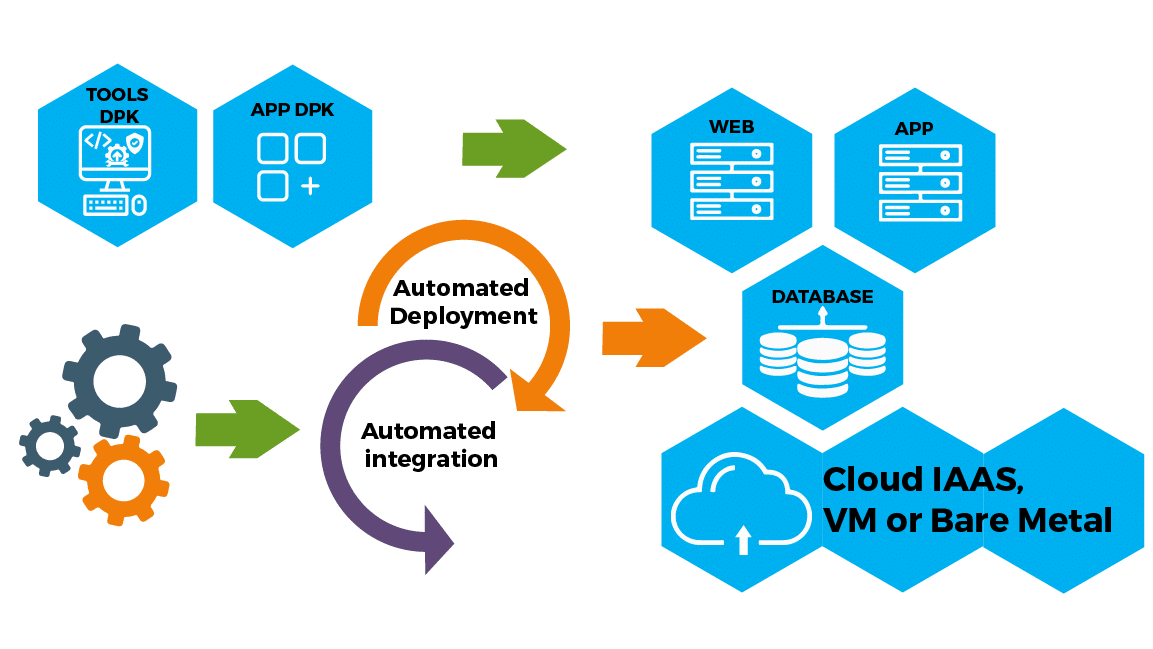

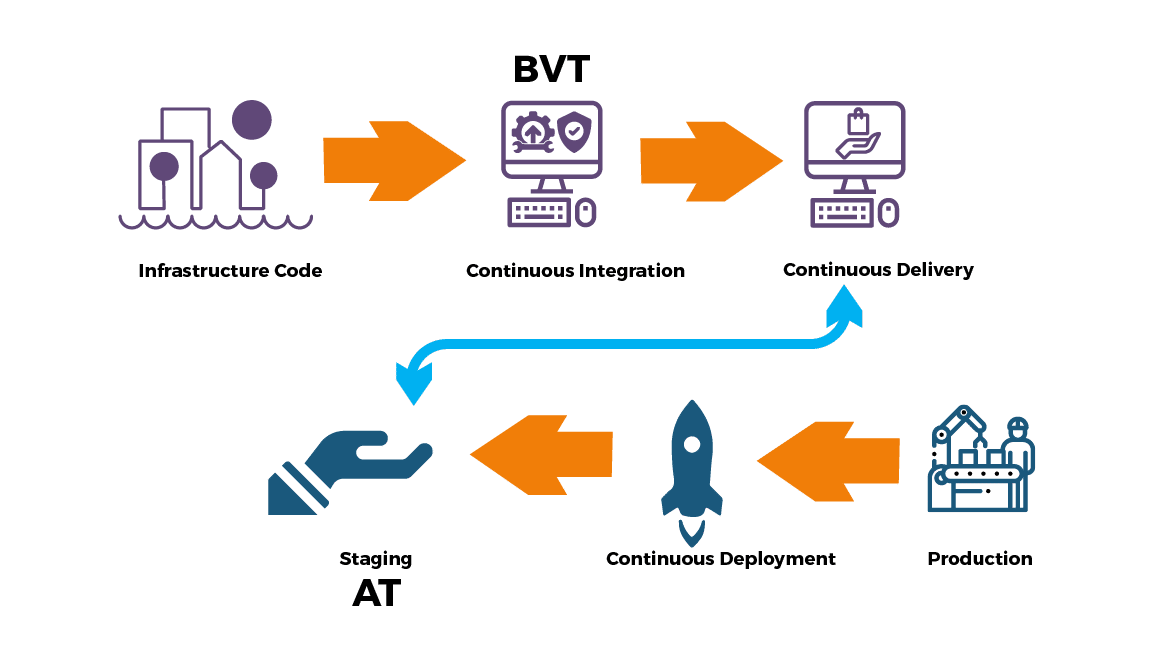

Infrastructure-as-Code (IaC) Workflow

Benefits of Infrastructure-as-Code (IaC)

The benefits of IaC are intuitive and precisely what you'd imagine them to be:

1. Lower Costs

You don't need a team of professionals to routinely handle resource provisioning, configuration, troubleshooting, hardware setup, and so on by hand. That saves time and money.

2. Speedy Provisioning

With IaC, provisioning resources across environments, from development to deployment, comes down to running a script. This shortens the software development lifecycle considerably and makes your organization more responsive to external challenges.

3. Consistency

People make mistakes. No matter how well you communicate and how much effort your team puts in, manual work will produce errors, and usually more than one. With IaC, the definition files are a single source of truth, so there is no ambiguity about what they do. You can execute them repeatedly and get predictable results every time.

4. Accountability

When you need to trace changes to definition files, you can do so with ease. The files are versioned, so every change is recorded and available for later review. Once again, there is never any confusion about who did what.

5. Resilience

Resource provisioning and configuration are often labor-intensive tasks that demand specialized skills and are usually handled by experienced staff. When one or more of those people leave the organization, they take their knowledge with them. With IaC, that provisioning knowledge stays with the organization in the form of definition files.

5 Challenges of Infrastructure-as-Code (IaC)

Every new solution comes with a new set of problems, and IaC is no different. The very things that make IaC so powerful and efficient also present some unique challenges to organizations. Here's a brief overview of them:

1. Accidental Destruction

In theory, once automated systems are operational, they need no management beyond periodic fix-or-replace tasks. In reality, even automated systems run into problems, and if left unattended these problems can accumulate over time into system-level failures. This gradual decay is sometimes called erosion.

2. Configuration Drift

Over time, automated configuration can leave infrastructure elements drifting apart. For instance, a fix introduced on one server may not be replicated on all servers. Differences aren't always bad, but it's essential to document and manage them.
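Drift of this kind can be detected by comparing each server's settings against a shared baseline. The sketch below is illustrative only: the server names, the setting keys, and the `find_drift` helper are assumptions made for the example, not part of any real tool.

```python
def find_drift(baseline, servers):
    """Map each drifted server to {setting: (expected, found)}."""
    drift = {}
    for name, config in servers.items():
        diffs = {
            key: (baseline.get(key), config.get(key))
            for key in sorted(set(baseline) | set(config))
            if baseline.get(key) != config.get(key)
        }
        if diffs:
            drift[name] = diffs
    return drift


# Hypothetical baseline and fleet; app-2 missed a fix applied elsewhere.
BASELINE = {"patch_level": 7, "ntp": "enabled"}
SERVERS = {
    "app-1": {"patch_level": 7, "ntp": "enabled"},
    "app-2": {"patch_level": 6, "ntp": "enabled"},
}

print(find_drift(BASELINE, SERVERS))
# {'app-2': {'patch_level': (7, 6)}}
```

A report like this gives the team something to document and act on, whether the difference turns out to be intentional or not.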

3. Lack of Expertise

Creating definition files and testing them to ensure that they work flawlessly requires an in-depth knowledge of all the elements that comprise the organization's IT infrastructure. That's a rare set of skills.

4. Lack of Proper Design and Planning

Automation initiatives involve many unknowns, and it is vital to identify and address them in the planning stage. Persistent testing and staggered implementation of automation projects can give skeptical business leaders the knowledge and confidence they need to lead more automation projects in the future.

5. Error Replication

In manual processes, it's easy to track human actions and replicate errors. With automation involved, error replication becomes an arduous task. Analyzing log files, workflows, and other data may not help system administrators recreate the error conditions accurately.

5 Principles of Infrastructure-as-Code (IaC)

The following principles are designed to help you maximize your ROI from your IaC strategy without falling into common pitfalls.

1. Reproduce Systems Easily

Your IaC strategy should help you build and rebuild any element of your IT infrastructure with ease and speed. Doing so should not require significant human effort or complex decision-making.

All the tasks involved – from choosing the software to be installed to its configuration – must be coded into the definition files. The scripts and the tools that manage resource provisioning should have the information to perform their tasks without human intervention.

2. Idempotence

Meticulous business leaders are naturally skeptical of automated systems and their ability to perform complex tasks. IaC must therefore deliver the same result no matter how many times it is executed: applying a definition to a system that already matches it should change nothing. For instance, when new servers are added, they must be identical (or at least near-identical) to the current servers in capacity, performance, and reliability. This way, whenever new infrastructure elements are added, all decisions, from configuration to hostnames, are automated and predetermined.

Of course, some level of configuration drift will creep into the system with time, but that must be recorded and managed.
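The property described above can be illustrated with a small sketch. The `ensure_servers` function, the in-memory `inventory` dictionary, and the `web-N` naming scheme are all hypothetical stand-ins for a real provisioning backend. The point is only that a second run against an already-correct system makes no changes.

```python
def ensure_servers(inventory, count, spec):
    """Make sure web-1..web-<count> exist in `inventory` and match `spec`.

    Returns the names that had to be created or corrected on this run.
    """
    changed = []
    for i in range(1, count + 1):
        name = f"web-{i}"  # names are predetermined, not chosen by hand
        if inventory.get(name) != spec:
            inventory[name] = dict(spec)
            changed.append(name)
    return changed


inventory = {}
spec = {"cpu": 2, "memory_gb": 4}

print(ensure_servers(inventory, 3, spec))  # ['web-1', 'web-2', 'web-3']
print(ensure_servers(inventory, 3, spec))  # []
```

The function checks state before acting instead of blindly creating servers, which is what makes repeated runs safe.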

3. Repeatable Processes

System administrators have a natural affinity towards intuitive tasks. When resource allocation is imminent, they prefer to do it the most intuitive way – assess the resource requirements, determine the best practices, and provision resources.

Although effective, such a process is counterproductive to automation. IaC demands that system administrators think in scripts. Their tasks must be broken down or grouped into repeatable processes that can be codified in scripts.

To be fair, this method introduces some rigidity into the system. If one server needs an additional partition of 40GB and another needs 80GB, IaC principles dictate running a single overarching script that allocates same-size partitions to both servers. In this case, the 80GB partition would be the right choice, because it covers the larger requirement.
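The partition example can be written as a tiny repeatable rule. This is a sketch under the assumption that the uniform size is simply the largest individual requirement; the function name and server names are made up for illustration.

```python
def uniform_partition_plan(requirements_gb):
    """Give every server the same partition size: the largest one requested."""
    size = max(requirements_gb.values())
    return {server: size for server in requirements_gb}


print(uniform_partition_plan({"server-a": 40, "server-b": 80}))
# {'server-a': 80, 'server-b': 80}
```

The trade-off is visible in the output: `server-a` receives more space than it asked for, in exchange for a single process that needs no per-server decisions.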

4. Disposable Systems

IaC depends heavily on reliable and resilient software to make hardware reliability irrelevant to system operations. In the cloud era, where the underlying hardware may or may not be reliable, organizations cannot let hardware failures disrupt their businesses. Software-level resource provisioning therefore ensures that a hardware failure is met immediately with the allocation of alternate hardware, so that IT operations remain uninterrupted.

Dynamic infrastructure that can be created, destroyed, resized, moved, and replaced sits at the core of IaC. It should handle infrastructure changes such as resizing and expansion gracefully.
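A minimal sketch of the disposable-systems idea follows. Instead of repairing a failed node, the code discards it and provisions a replacement from the same specification. The `pool` dictionary, the health check, and the naming callback are hypothetical placeholders for whatever a real platform would provide.

```python
import itertools


def replace_failed(pool, is_healthy, new_name):
    """Swap every unhealthy node for a fresh one built from the same spec.

    Returns a mapping of {failed_name: replacement_name}.
    """
    replaced = {}
    for name in list(pool):  # iterate over a snapshot; the pool is mutated below
        if not is_healthy(name):
            spec = pool.pop(name)  # dispose of the failed node
            fresh = new_name()
            pool[fresh] = spec     # provision an identical replacement
            replaced[name] = fresh
    return replaced


counter = itertools.count(3)
pool = {"node-1": {"cpu": 2}, "node-2": {"cpu": 2}}

# Assume, for the example, that node-2 has failed its health check.
result = replace_failed(pool, lambda n: n != "node-2", lambda: f"node-{next(counter)}")

print(result)        # {'node-2': 'node-3'}
print(sorted(pool))  # ['node-1', 'node-3']
```

Because the replacement is built from the stored specification, nothing about the failed node needs to be recovered.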

5. Ever-evolving Design

IT infrastructure design keeps changing to accommodate the evolving needs of the organization. Because infrastructure changes are expensive, organizations try to limit them by predicting future requirements as precisely as they can and designing their systems accordingly. The resulting designs are often overly complex, which makes future changes even more difficult and therefore more expensive.

IaC-driven cloud infrastructure tackles this problem by simplifying change management. Current systems are designed to meet current requirements, and future changes must be easy to implement. The way to keep change management easy and quick is to make changes frequently, so that all stakeholders become familiar with the common issues and can create scripts that overcome them effectively.

Conclusion

IaC, when implemented correctly, reduces the manual tasks of critical employees such as software developers and lets them focus on DevOps delivery and continuous improvement instead. IaC eliminates infrastructure bottlenecks to a large extent and turns infrastructure provisioning into a self-service activity.

For businesses that need to be agile, flexible, and responsive to market challenges, IaC is a strong fit.
